Sensitive data control: Industries like healthcare, finance, and defense must comply with strict data protection regulations (e.g., HIPAA, GDPR).

On-premises data sovereignty: Guarantees data does not leave the physical or jurisdictional boundaries required by law or policy.

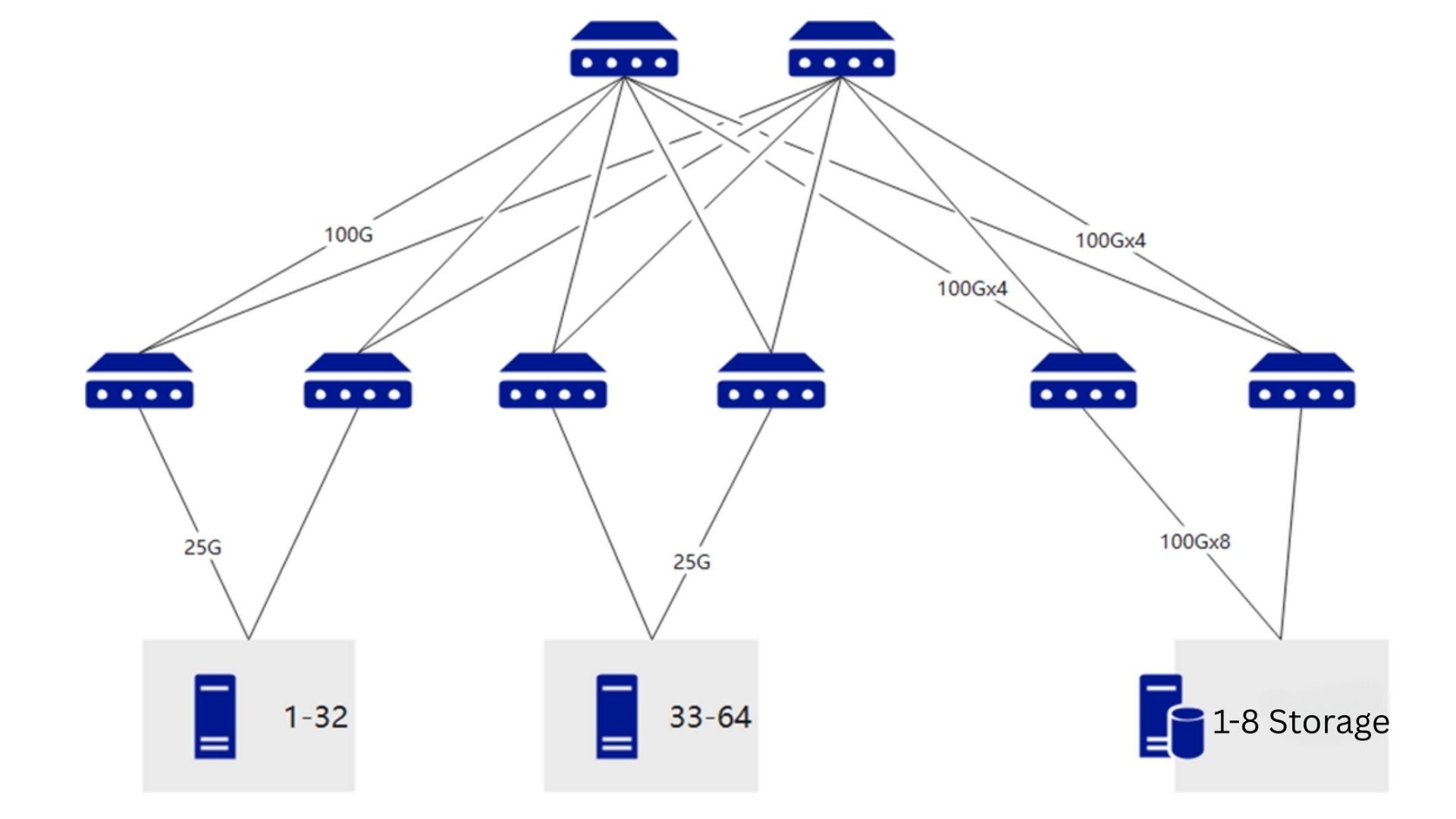

Low latency & high throughput: Tailor the network (e.g., InfiniBand), storage (e.g., NVMe over Fabrics), and compute architecture (e.g., GPU topology) for specific AI workloads.

Local caching: Reduces latency for repeated training runs and fine-tuning jobs.

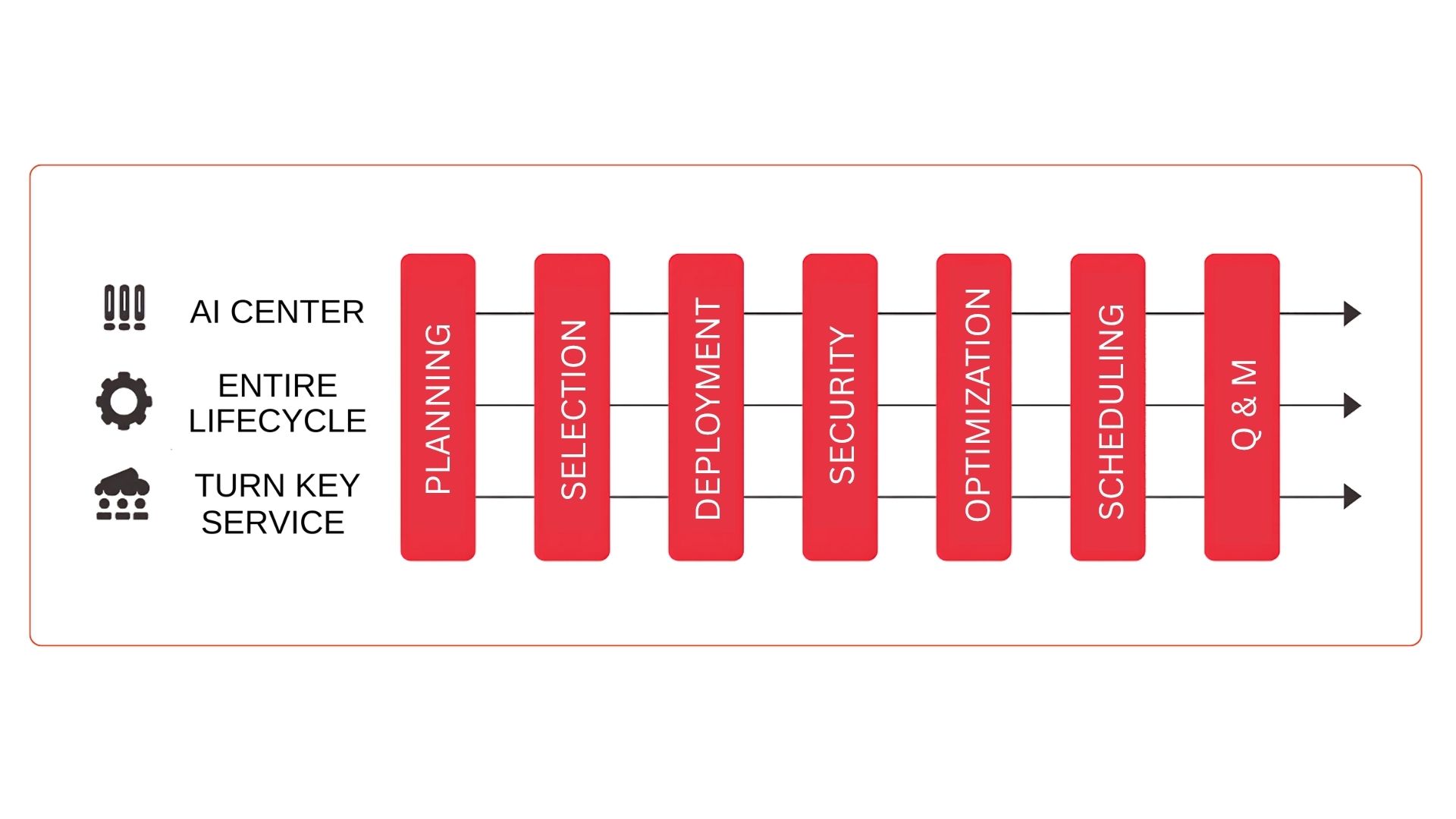

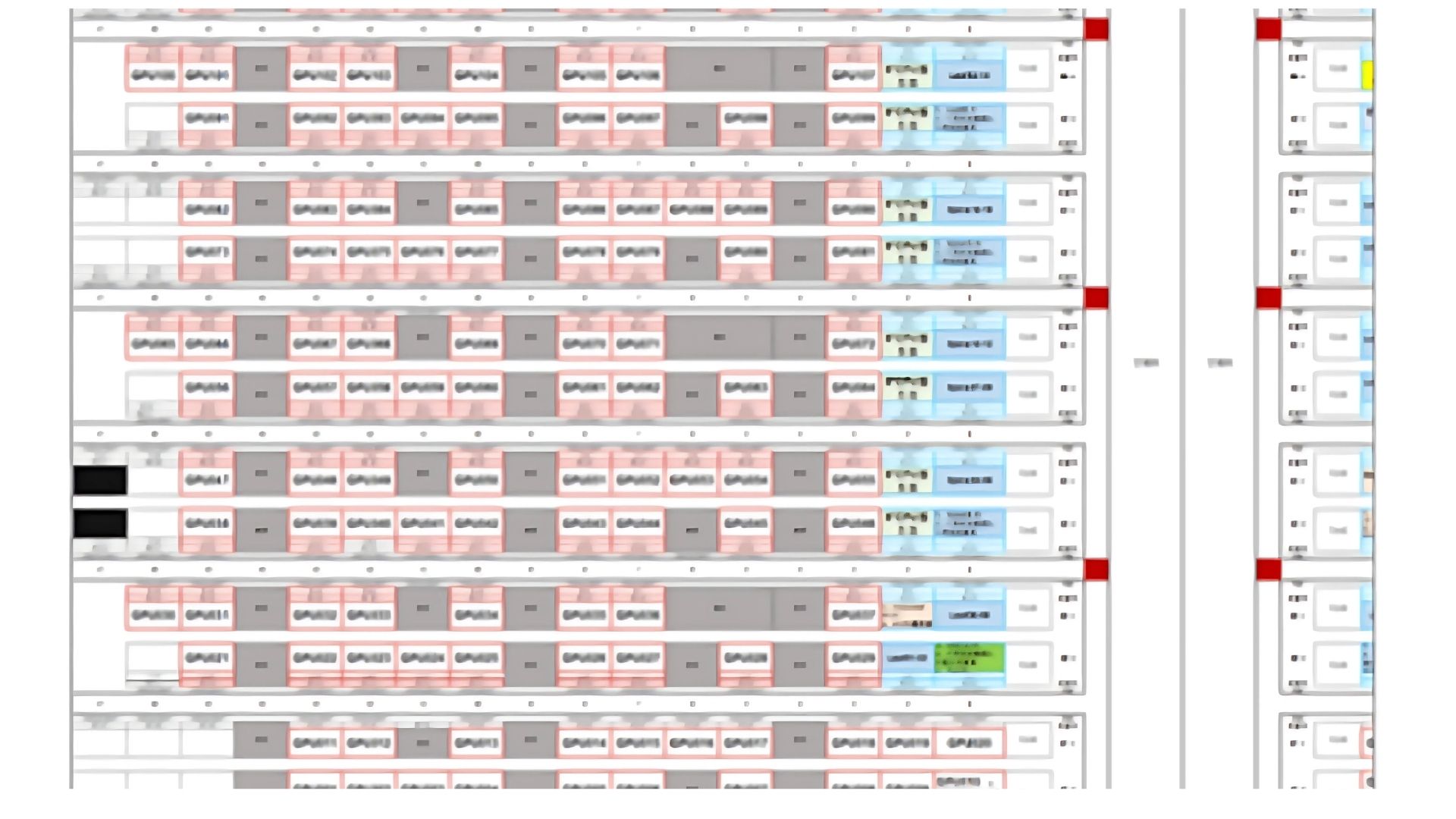

Custom scheduling: Full control over job prioritization, GPU reservation, and multi-user orchestration.
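To make "job prioritization" and "GPU reservation" concrete, here is a minimal Python sketch of a strict-priority scheduler over a fixed GPU pool. It is a toy under stated assumptions: `GpuScheduler`, `Job`, and the `reserved` parameter are hypothetical names, lower numbers mean higher priority, and a fixed number of GPUs is held back so that only priority-0 jobs can claim them. A production cluster would more likely rely on an established scheduler such as Slurm or the Kubernetes scheduler.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Job:
    priority: int  # lower value = higher priority (0 is most urgent)
    seq: int       # submission order, breaks ties between equal priorities
    name: str = field(compare=False)
    gpus: int = field(compare=False)


class GpuScheduler:
    """Toy strict-priority scheduler for a fixed pool of GPUs (hypothetical)."""

    def __init__(self, total_gpus: int, reserved: int = 0):
        self.free = total_gpus
        self.reserved = reserved  # GPUs that only priority-0 jobs may claim
        self.queue: list[Job] = []
        self.running: list[Job] = []
        self._seq = 0

    def submit(self, name: str, gpus: int, priority: int) -> None:
        heapq.heappush(self.queue, Job(priority, self._seq, name, gpus))
        self._seq += 1

    def schedule(self) -> list[str]:
        """Start queued jobs in priority order; return the names started."""
        started = []
        while self.queue:
            job = self.queue[0]
            # Non-urgent jobs may not dip into the reserved GPUs.
            usable = self.free if job.priority == 0 else self.free - self.reserved
            if job.gpus > usable:
                break  # strict ordering: nothing may jump ahead of the head job
            heapq.heappop(self.queue)
            self.free -= job.gpus
            self.running.append(job)
            started.append(job.name)
        return started

    def release(self, name: str) -> None:
        """Mark a running job finished and return its GPUs to the pool."""
        for job in self.running:
            if job.name == name:
                self.running.remove(job)
                self.free += job.gpus
                return
        raise KeyError(name)


if __name__ == "__main__":
    s = GpuScheduler(total_gpus=8, reserved=2)
    s.submit("nightly-finetune", gpus=4, priority=2)
    s.submit("prod-inference", gpus=2, priority=0)
    s.submit("experiment", gpus=4, priority=1)
    print(s.schedule())  # ['prod-inference', 'experiment']
    s.release("experiment")
    print(s.schedule())  # ['nightly-finetune']
```

With 8 GPUs and 2 reserved, the priority-0 inference job starts first, the priority-1 experiment fits in the unreserved GPUs that remain, and the nightly fine-tune waits until the experiment releases its GPUs. The strict `break` stops lower-priority jobs from jumping the queue at the cost of some idle capacity; a backfilling scheduler would make that trade-off differently.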

Hardware selection: Choose optimal GPUs, CPU/GPU ratios, memory, storage, and interconnects.

Software stack control: Use your preferred frameworks, libraries, operating system, and Kubernetes/Docker versions, or even build components from source.

Support for Proprietary Models & Workflows

High public cloud TCO: Renting GPUs (e.g., A100, H100) in the public cloud is expensive over the long term, especially for continuous inference workloads.

CapEx over OpEx: Once deployed, a private cluster avoids unpredictable monthly billing, and its hardware cost can be amortized over several years.

No egress fees: Avoid unpredictable data-transfer charges when moving large model weights, datasets, and inference results out of the public cloud.
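The cost argument in the points above comes down to simple arithmetic. The Python sketch below compares a month of rented GPUs plus egress against an amortized private cluster and computes the cash-flow break-even month. Every number in it is a hypothetical placeholder rather than a real price, the function names are illustrative, and it deliberately ignores factors such as hardware refresh cycles, financing, and committed-use discounts.

```python
import math

HOURS_PER_MONTH = 730  # average hours per month (8,760 hours / 12)


def cloud_monthly(gpus, rate_per_gpu_hour, utilization, egress_tb, egress_rate_per_tb):
    """Monthly public-cloud spend: GPU rental plus data-egress charges."""
    gpu_cost = gpus * HOURS_PER_MONTH * utilization * rate_per_gpu_hour
    return gpu_cost + egress_tb * egress_rate_per_tb


def onprem_monthly(capex, amortization_months, opex_per_month):
    """Monthly private-cluster cost with capex amortized straight-line."""
    return capex / amortization_months + opex_per_month


def breakeven_months(capex, opex_per_month, cloud_per_month):
    """Smallest whole month m where capex + m * opex <= m * cloud spend.

    Returns None when cloud spend never exceeds on-prem running costs.
    """
    monthly_saving = cloud_per_month - opex_per_month
    if monthly_saving <= 0:
        return None
    return math.ceil(capex / monthly_saving)


if __name__ == "__main__":
    # All figures below are hypothetical placeholders, not vendor prices.
    cloud = cloud_monthly(gpus=8, rate_per_gpu_hour=2.50, utilization=1.0,
                          egress_tb=10, egress_rate_per_tb=90)
    onprem = onprem_monthly(capex=300_000, amortization_months=36, opex_per_month=3_000)
    print(f"cloud ${cloud:,.0f}/mo vs on-prem ${onprem:,.0f}/mo")
    print("break-even after", breakeven_months(300_000, 3_000, cloud), "months")
```

With these placeholder inputs the cloud side comes to $15,500 a month against roughly $11,333 for the amortized cluster, and the upfront spend is recovered in month 24. Dropping utilization to 0.5 with the same inputs pushes break-even out to month 58, which is why the case is strongest for continuously running workloads.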

Ensure data never crosses jurisdictional boundaries, satisfying legal and policy-driven location requirements. To meet those requirements, data must be:

Stored and processed within specific geographic boundaries (data residency).

Kept under strict control to prevent unauthorized access, sharing, or exposure.

Handled with full auditability for compliance verification and legal reporting.
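The residency and auditability requirements above can be enforced in code at the point where data is written. The following Python sketch, offered under stated assumptions, checks a target location against an allow-list and records every attempt, allowed or not, as a JSON audit line. The location names, `ALLOWED_LOCATIONS`, `record`, and `store_dataset` are all hypothetical, and access control and log tamper-protection are out of scope here.

```python
import json
from datetime import datetime, timezone

# Hypothetical policy: the only storage locations this deployment may use.
ALLOWED_LOCATIONS = {"onprem-dc-frankfurt", "onprem-dc-munich"}

# In-memory stand-in for what would normally be append-only, tamper-evident storage.
audit_log: list[str] = []


def record(event: str, **details) -> None:
    """Append one timestamped audit entry as a JSON line."""
    entry = {"ts": datetime.now(timezone.utc).isoformat(), "event": event, **details}
    audit_log.append(json.dumps(entry, sort_keys=True))


def store_dataset(name: str, location: str, user: str) -> str:
    """Reject writes outside the residency boundary and audit every attempt."""
    allowed = location in ALLOWED_LOCATIONS
    record("store_attempt", dataset=name, location=location, user=user, allowed=allowed)
    if not allowed:
        raise PermissionError(f"{location!r} is outside the permitted residency boundary")
    # A real implementation would write the data here; this sketch only returns a path.
    return f"{location}/{name}"


if __name__ == "__main__":
    print(store_dataset("patient-scans", "onprem-dc-frankfurt", user="alice"))
    try:
        store_dataset("patient-scans", "us-east-1", user="bob")
    except PermissionError as err:
        print("blocked:", err)
    print(len(audit_log), "audit entries")
```

Logging before the permission check means rejected attempts leave a trace too, which is usually what compliance reporting needs.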
